Architecture

Overview

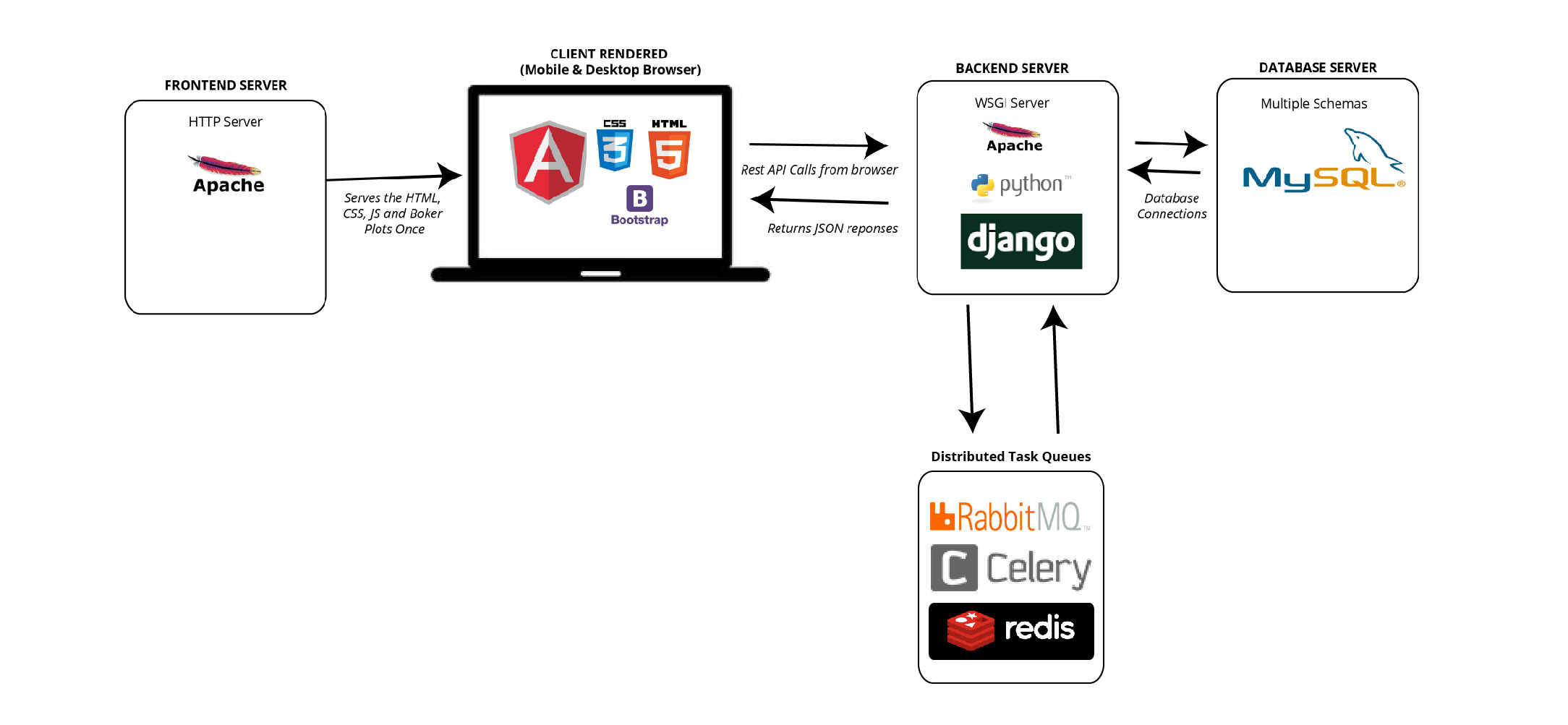

The Football REST API is implemented in Python using Django and Django REST framework. The application data is stored in the Football MySQL database on AWS, and the API also interacts with the rest of the Football database.

To keep REST API response times reasonable and reduce the time it takes to produce a player report, the application uses Celery, a distributed task queue. Celery keeps slow tasks off the main Django thread and parallelises the tasks that need to run to generate a report. Celery also includes a task scheduler, which is used to run various tasks on a daily or weekly basis.
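As a rough illustration of why this work is moved off the request thread, the sketch below uses the standard library's `concurrent.futures` as a local stand-in for Celery: the request handler submits one job per player and returns immediately, while the jobs run concurrently. This uses threads in a single process rather than separate Celery worker processes, and the function names (`build_player_data`, `create_report`) are hypothetical.

```python
from concurrent.futures import Future, ThreadPoolExecutor
import time


def build_player_data(player_id: int) -> dict:
    """Hypothetical stand-in for a slow data task (e.g. DB queries for one player)."""
    time.sleep(0.1)  # simulate a slow query
    return {"player_id": player_id, "status": "done"}


# A shared pool plays the role of the Celery workers in this sketch.
executor = ThreadPoolExecutor(max_workers=6)


def create_report(player_ids: list[int]) -> list[Future]:
    """Submit one task per player and return at once, without waiting for results."""
    return [executor.submit(build_player_data, pid) for pid in player_ids]


if __name__ == "__main__":
    start = time.perf_counter()
    futures = create_report([1, 2, 3])
    print(f"request returned after {time.perf_counter() - start:.3f}s")
    results = [f.result() for f in futures]  # block only when the data is needed
    print(results)
```

The "request" returns almost immediately, and because the three jobs run concurrently they finish in roughly the time of one job rather than three.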

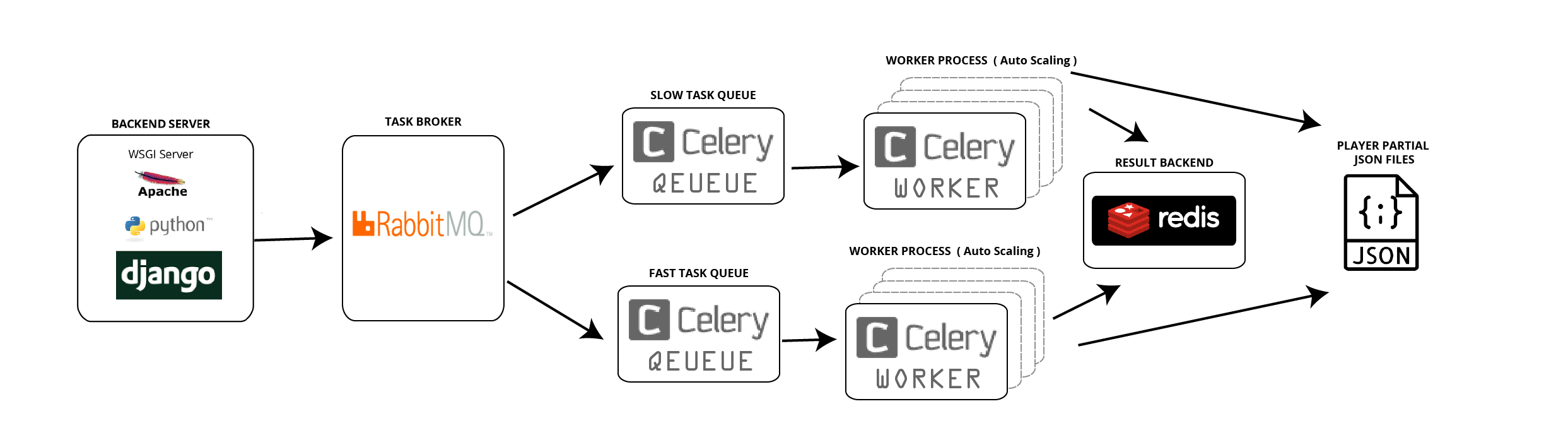

Distributed Task Queues

When the create-report API is called, multiple data tasks are generated for each player and sent to the RabbitMQ broker, which forwards each task to the corresponding task queue. There are two queues: one for fast tasks and one for slow (delayed) tasks. Fast tasks typically run in under 10 seconds, and all of them must complete before a user can open the report. Slow tasks run much longer and can continue in the background while the user is viewing the report; once their data is available, the report is updated the next time the user opens it.
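The routing rule and the "report can be opened" condition described above can be modelled in a few lines. This is a minimal sketch, not the project's code: the queue names (`fast`, `slow`) and task names are hypothetical. In Celery itself, per-task routing is normally configured with the `task_routes` setting rather than a hand-written function.

```python
from dataclasses import dataclass

FAST_QUEUE = "fast"  # hypothetical queue names
SLOW_QUEUE = "slow"

# Hypothetical task names; the real fast/slow split is shown in the diagram.
SLOW_TASKS = {"fetch_match_history"}


@dataclass
class QueuedTask:
    name: str
    player_id: int
    queue: str
    done: bool = False


def route(name: str) -> str:
    """Pick the queue for a task based on its name."""
    return SLOW_QUEUE if name in SLOW_TASKS else FAST_QUEUE


def enqueue_report_tasks(player_ids, task_names) -> list[QueuedTask]:
    """Create one task per (player, task name) pair, each routed to a queue."""
    return [QueuedTask(n, pid, route(n)) for pid in player_ids for n in task_names]


def report_can_open(tasks) -> bool:
    """The report opens once every fast task is done; slow tasks may still be running."""
    return all(t.done for t in tasks if t.queue == FAST_QUEUE)
```

For example, `enqueue_report_tasks([7], ["player_stats", "fetch_match_history"])` puts the first task on the fast queue and the second on the slow queue, and the report becomes openable as soon as the fast one is marked done.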

Each queue has its own pool of worker processes that consume tasks in parallel. Celery is configured to autoscale each pool between 1 and 6 worker processes.
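A worker like this is typically started with Celery's `--autoscale=6,1` option (maximum first, then minimum) and bound to a queue with `-Q`. The sketch below only illustrates the clamping idea behind the 1 to 6 range, assuming a simple "one process per pending task" rule; Celery's own autoscaler is more involved, so treat this as an approximation.

```python
MIN_PROCS = 1  # lower bound of the pool, as described above
MAX_PROCS = 6  # upper bound of the pool


def target_pool_size(pending_tasks: int) -> int:
    """Simplified rule: one process per pending task, clamped to [MIN_PROCS, MAX_PROCS]."""
    return max(MIN_PROCS, min(MAX_PROCS, pending_tasks))
```

An idle queue keeps a single process alive, while a burst of 20 report tasks is capped at 6 processes.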

Redis is used as the result backend for Celery. As each task progresses and completes, its state and result are stored in Redis. This makes it possible to create tasks that depend on the completion and result of an earlier task. It is also useful for monitoring the creation of a report, since you can track the progress of all queued and running tasks.
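To show how report progress could be derived from the result backend, here is a minimal sketch that summarises a mapping of task ID to state. In a live system each state would come from Celery's `AsyncResult(task_id).state`, backed by Redis; here a plain dict stands in for those lookups, and the `report_progress` helper is hypothetical. The state names are Celery's built-in ones.

```python
from collections import Counter

# Celery states after which a task will not change again.
READY_STATES = {"SUCCESS", "FAILURE", "REVOKED"}


def report_progress(states: dict[str, str]) -> dict:
    """Summarise task states (task id -> state) as read from the result backend."""
    counts = Counter(states.values())
    finished = sum(counts[s] for s in READY_STATES)
    total = len(states)
    return {
        "total": total,
        "finished": finished,
        "failed": counts["FAILURE"],
        "percent": round(100 * finished / total) if total else 100,
    }


if __name__ == "__main__":
    print(report_progress({"a": "SUCCESS", "b": "STARTED", "c": "PENDING", "d": "FAILURE"}))
    # {'total': 4, 'finished': 2, 'failed': 1, 'percent': 50}
```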

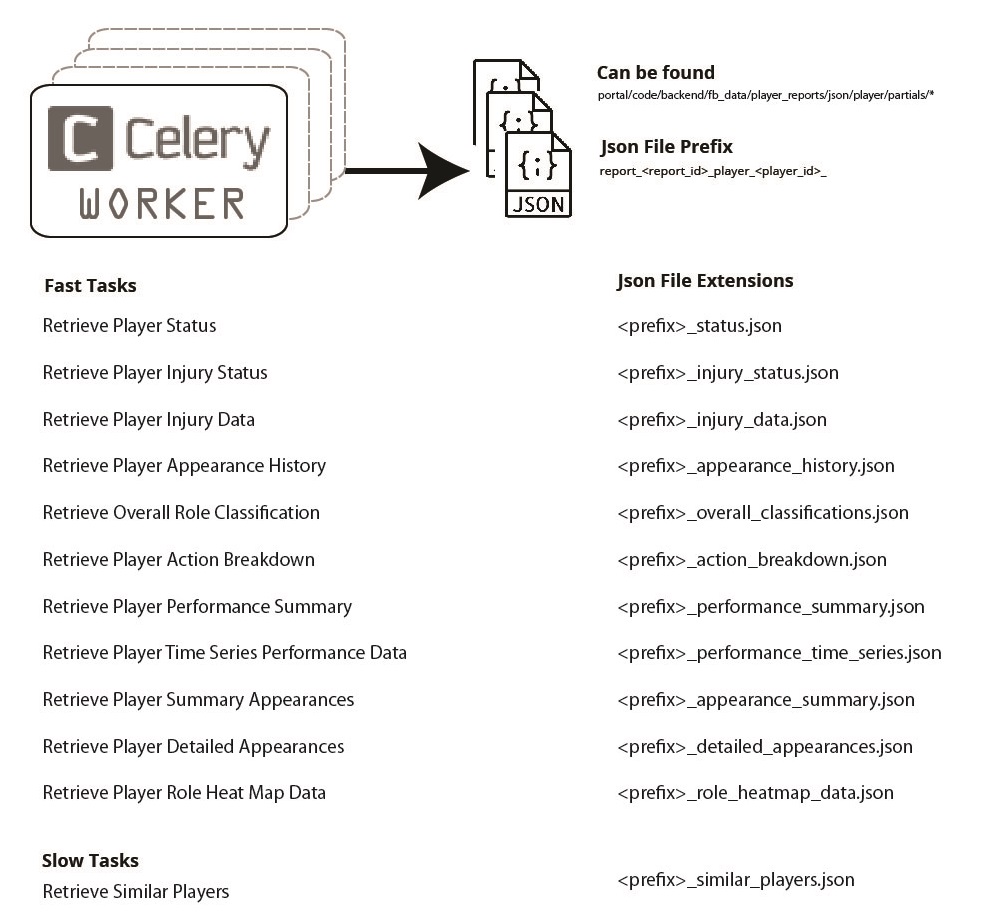

Player Report Generation

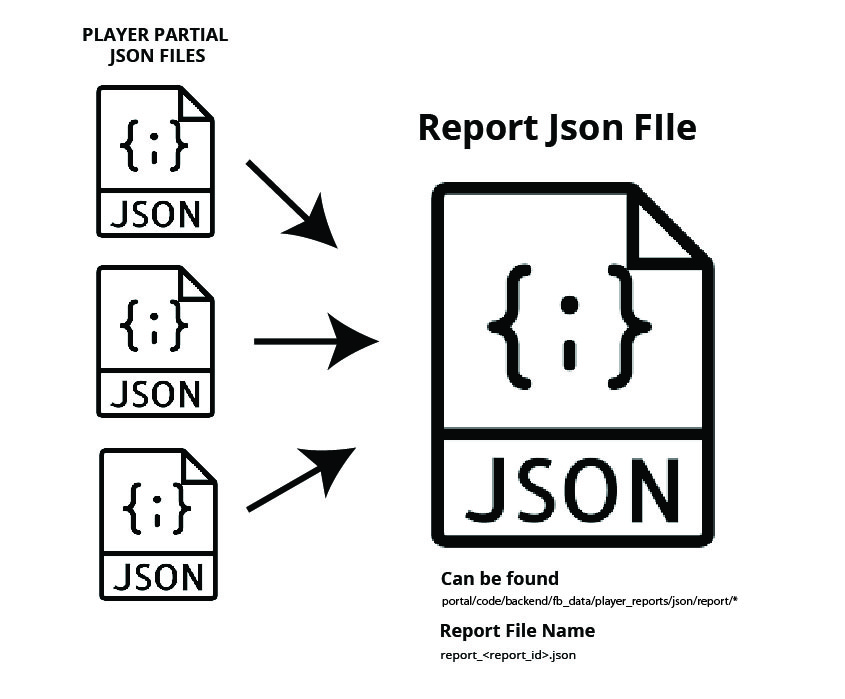

When a user generates a new report, the following fast and slow tasks are currently run for each player. Each task outputs a partial JSON file containing data retrieved from the database and processed into a format the frontend can easily consume.
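A task's final step might look like the sketch below, which writes one partial JSON file per player per task. The directory layout `<report_dir>/<player_id>/<task_name>.json` and the `write_partial` helper are hypothetical; they only illustrate the "one partial file per task" idea.

```python
import json
import tempfile
from pathlib import Path


def write_partial(report_dir: Path, player_id: int, task_name: str, data: dict) -> Path:
    """Write one task's output as a partial JSON file for a player.

    The layout <report_dir>/<player_id>/<task_name>.json is hypothetical.
    """
    path = report_dir / str(player_id) / f"{task_name}.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(data))
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = write_partial(Path(tmp), 7, "player_stats", {"goals": 3})
        print(path.relative_to(tmp).as_posix())  # 7/player_stats.json
```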

After all the tasks have completed and the user opens the report for the first time, Django combines all the partial player JSON files and saves them as a single report JSON file. Subsequent reads of the report use this file, which makes access faster.

However, if any slow tasks are still outstanding, each read request merges the partial files instead of reading the single report JSON file.
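The read path described above (merge partial files while slow tasks are pending, otherwise build and reuse a single combined file) can be sketched as follows. This assumes a hypothetical layout of `<report_dir>/<player_id>/<task_name>.json` for the partial files and `report.json` for the combined file; the `load_report` function and its `slow_tasks_pending` flag are illustrative names, not the project's code.

```python
import json
import tempfile
from pathlib import Path


def load_report(report_dir: Path, slow_tasks_pending: bool) -> dict:
    """Return report data for the frontend.

    When every task has finished, report.json is built once and reused;
    while slow tasks are outstanding, the partial files are merged on each read.
    """
    combined = report_dir / "report.json"
    if not slow_tasks_pending and combined.exists():
        return json.loads(combined.read_text())

    # Merge <player_id>/<task_name>.json files into {player_id: {task_name: data}}.
    report: dict = {}
    for partial in sorted(report_dir.glob("*/*.json")):
        player_id, task_name = partial.parent.name, partial.stem
        report.setdefault(player_id, {})[task_name] = json.loads(partial.read_text())

    if not slow_tasks_pending:
        combined.write_text(json.dumps(report))  # cache for future reads
    return report


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "7").mkdir()
        (root / "7" / "player_stats.json").write_text(json.dumps({"goals": 3}))
        print(load_report(root, slow_tasks_pending=False))
        # {'7': {'player_stats': {'goals': 3}}}
```

Keeping the merge logic in one place means the "slow tasks still running" and "first full read" cases produce the same structure; only the second one persists it.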

Note: These JSON files should be stored on S3 rather than on the EC2 instance's local storage.

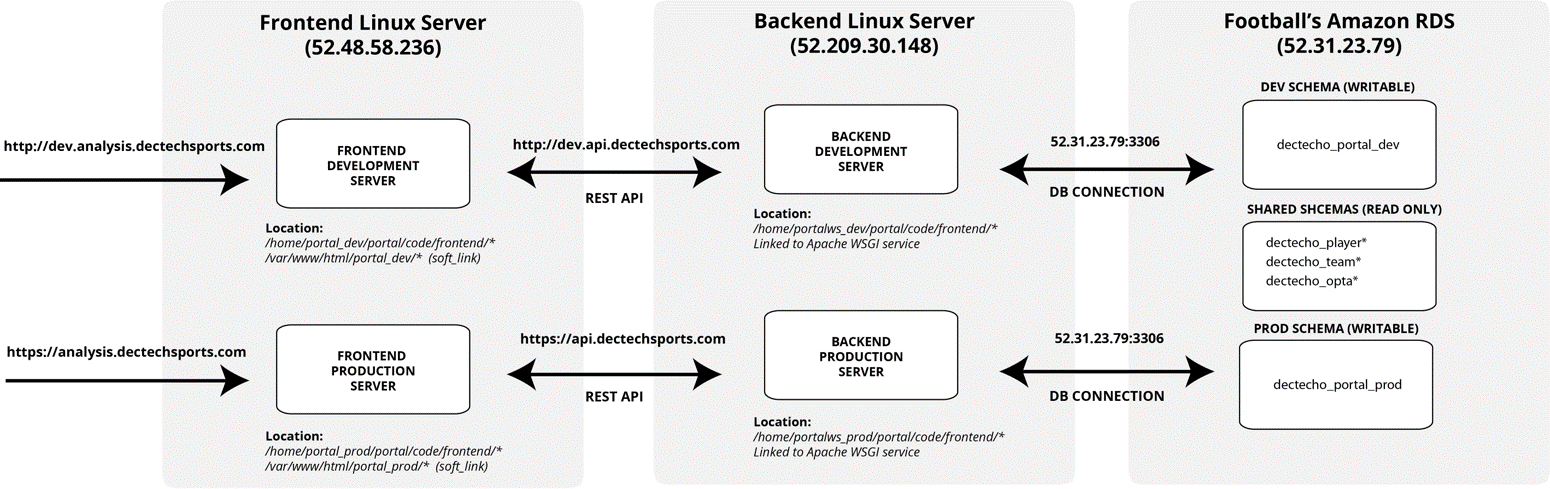

Servers

Both the Django application and the Celery task queues are hosted on the same EC2 instance (52.209.30.148). The server runs CentOS, and Django is served by Apache through WSGI. The Football database runs on AWS RDS and can be accessed only from that EC2 instance, at awsfootballdb.c5jpk39beict.eu-west-1.rds.amazonaws.com on port 3306. The usernames and passwords can be found in the Dev Team’s password manager.
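For reference, a Django `DATABASES` setting pointing at this RDS instance could look like the sketch below. The host and port come from the description above; the environment variable names (`FOOTBALL_DB_NAME`, `FOOTBALL_DB_USER`, `FOOTBALL_DB_PASSWORD`) are hypothetical, and the database name is not documented here, so it is read from the environment as well.

```python
import os

# Hypothetical environment variable names: the real credentials are kept in the
# Dev Team's password manager and are read from the environment, not hard-coded.
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.mysql",
        "NAME": os.environ.get("FOOTBALL_DB_NAME", ""),
        "USER": os.environ.get("FOOTBALL_DB_USER", ""),
        "PASSWORD": os.environ.get("FOOTBALL_DB_PASSWORD", ""),
        "HOST": "awsfootballdb.c5jpk39beict.eu-west-1.rds.amazonaws.com",
        "PORT": "3306",
    }
}
```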